“The Nobel Prize in Physics and Neural Networks” is not a super-fact but just what I consider interesting information

The Nobel Prizes are in the process of being announced. The Nobel Prizes in Physiology or Medicine, Chemistry, Physics and Literature have been announced, and the Nobel Peace Prize will be announced any minute. The Nobel Prize in Economics will be announced on October 14.

The Nobel Peace Prize tends to get the most attention, but personally I focus more on the Nobel Prizes in the sciences. That may be because of my biases, but those prizes also tend to be more clear-cut and are rarely politicized. The Nobel Peace Prize is announced and awarded in Oslo, Norway, while all the other prizes are announced and awarded in Stockholm, Sweden.

Nobel Prize In Physics

What I wanted to talk about here is the Nobel Prize in Physics awarded to John J. Hopfield and Geoffrey E. Hinton. They made a number of important discoveries in the field of artificial intelligence, more specifically neural networks. This is really computer science, not physics. However, they used tools and models from physics to create their networks and algorithms, which is why the Nobel committee deemed it fitting to give them the Nobel Prize in Physics.

Perhaps we need a separate Nobel Prize for computer science. This prize is also of interest to me because I’ve created and used various neural networks myself. It was not part of my research or my job, so I am not an expert. For those of you interested in ChatGPT, it is built on a so-called deep learning neural network (one with multiple hidden layers). GPT-3, the model it grew out of, has about 175 billion parameters, that is, adjustable weights on the connections between its neurons. By the way, that is more than the roughly 100 billion neurons in the human brain, although a weight corresponds more to a connection between neurons than to a neuron itself. But OK, they aren’t real neurons.

So, what is an artificial neural network?

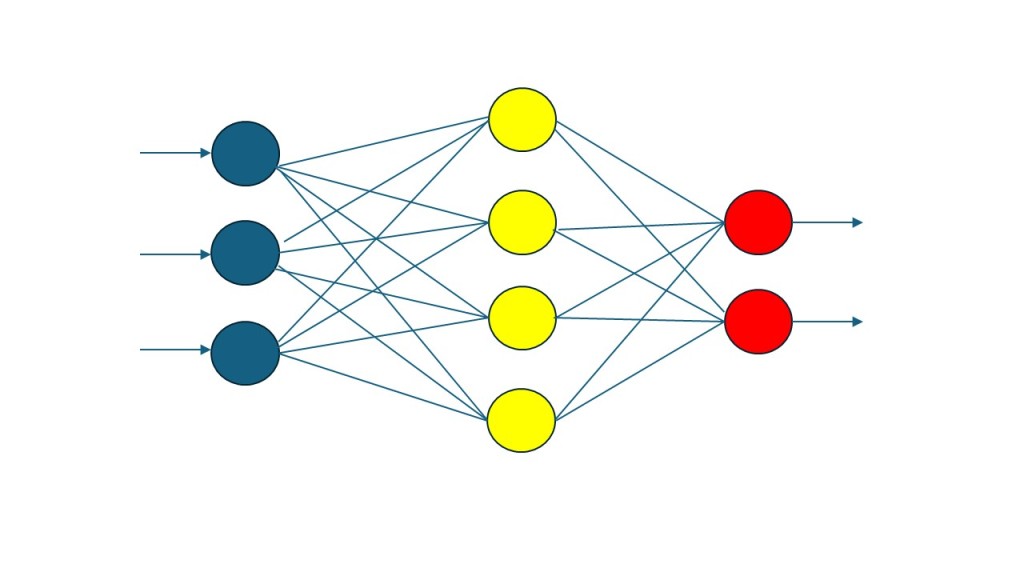

The first trainable neural networks, the perceptrons Frank Rosenblatt created in 1957, looked like the one above. They had input neurons and output neurons connected via weights that you adjusted using an algorithm. In the example above there are three inputs (2, 0, 3), and each output neuron receives the sum of the inputs multiplied by their weights:

3 × 0.2 + 0 + 2 × (-0.25) = 0.1 and 3 × 0.4 + 0 + 2 × 0.1 = 1.4. (The middle input is 0, so whatever weight it has contributes nothing.) Each output node then applies a threshold function, so its output is either 0 or 1.
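The arithmetic above is just a weighted sum followed by a threshold. Here is a minimal Python sketch of that forward pass. The weights on the middle input are hypothetical (that input is 0, so they do not affect the result), and the threshold of 0.5 is an assumption, since the text does not say where the threshold lies.

```python
# Forward pass of a single-layer perceptron using the numbers from the sums above.
# Assumptions: the middle-input weights (0.7, -0.3) and the 0.5 threshold are hypothetical.

def step(z, threshold=0.5):
    """Threshold (step) function: output 1 if the weighted sum reaches the threshold."""
    return 1 if z >= threshold else 0

def perceptron_outputs(inputs, weights, threshold=0.5):
    """Return the weighted sum and the 0/1 output for every output neuron."""
    sums = [sum(x * w for x, w in zip(inputs, row)) for row in weights]
    return sums, [step(s, threshold) for s in sums]

inputs = [3, 0, 2]  # ordered to match the sums in the text
weights = [
    [0.2, 0.7, -0.25],  # weights into output 1 (0.7 is hypothetical)
    [0.4, -0.3, 0.1],   # weights into output 2 (-0.3 is hypothetical)
]

sums, outputs = perceptron_outputs(inputs, weights)
print(sums, outputs)
```

With a threshold of 0.5, the first output neuron (sum 0.1) stays at 0 and the second (sum 1.4) outputs 1.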

To train the network, you create a set of inputs together with the output you want for each input. You start with some random weights, calculate the error you get, and use that error to calculate a new set of weights. You repeat this over and over until the network gives the desired output for the different training inputs. The amazing thing is that the trained network will often also give the desired output for inputs that were not used in the training. Unfortunately, these single-layer networks were quite limited. They can only learn patterns whose two classes can be separated by a straight line (or, with more inputs, a flat plane), so for a problem as simple as XOR (output 1 when exactly one of two inputs is 1) no suitable set of weights exists, and training never succeeds.
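One common way to turn the error into new weights is Rosenblatt’s perceptron learning rule: whenever an output is wrong, add (target - output) × input to each weight. The sketch below assumes 0/1 targets, a bias weight, a learning rate of 1, and zero starting weights instead of random ones so that every run is reproducible. It learns AND, but never finishes on XOR, because no single straight line separates XOR’s 1s from its 0s.

```python
# Perceptron learning rule, sketched under simple assumptions:
# 0/1 targets, a bias weight, learning rate 1, zero starting weights.

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

def predict(weights, bias, x):
    """Weighted sum plus bias, passed through a threshold at 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0

def train_perceptron(samples, epochs=100, lr=1.0):
    """Nudge the weights by (target - output) * input until every sample is right.

    Returns (weights, bias, converged)."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in samples:
            error = target - predict(weights, bias, x)
            if error != 0:
                mistakes += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:  # a full pass with no errors: training is done
            return weights, bias, True
    return weights, bias, False

for name, data in (("AND", AND), ("XOR", XOR)):
    _, _, converged = train_perceptron(data)
    print(name, "learned" if converged else "not learned after 100 epochs")
```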

In 1985 and 1986, Geoffrey Hinton, David Rumelhart and Ronald J. Williams presented an algorithm, backpropagation, for training neural networks with a hidden layer, and it was very successful. It could learn many patterns that single-layer networks could not, although, like any gradient-descent method, it is not guaranteed to find a solution and can get stuck in a local minimum. It set off a revolution in neural networks. The next year, in 1987, when I was a college student, I used that algorithm on a neural network with a hidden layer to do simple OCR (optical character recognition).

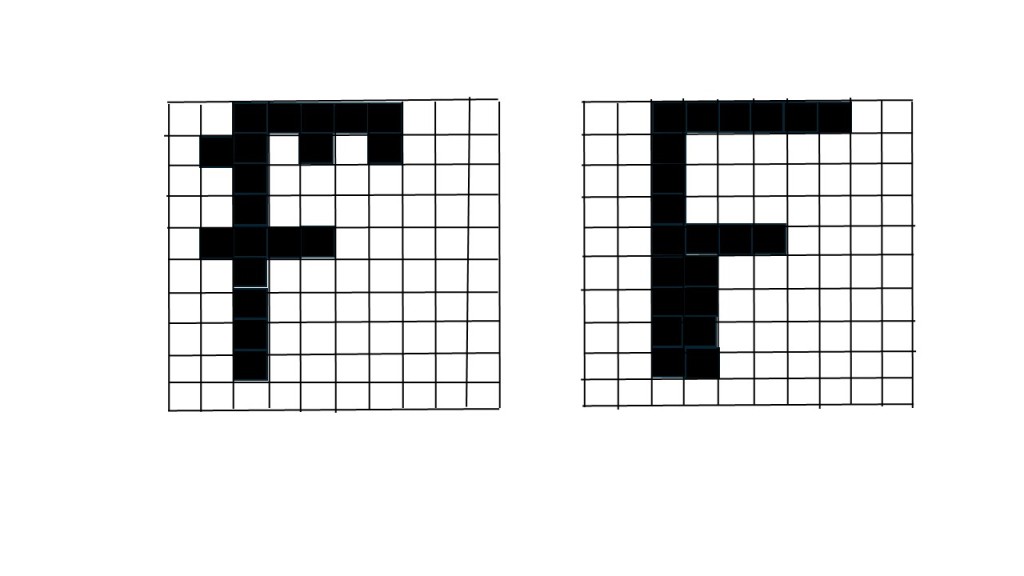

Note that a computer reading an image of a letter is very different from someone typing the letter on a keyboard. With an image, the computer needs OCR, a complicated and clever algorithm, to figure out which letter it is.

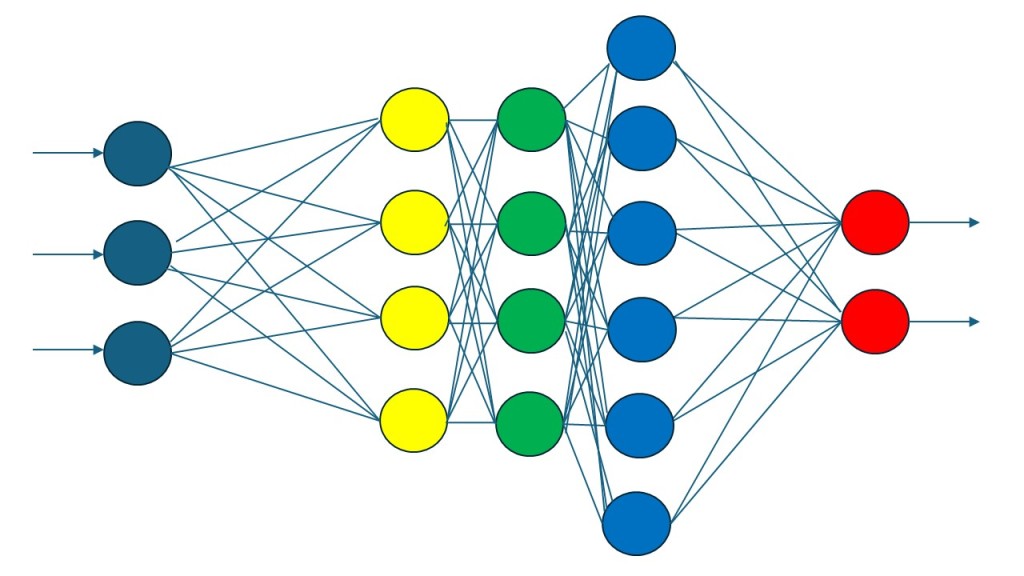

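In the network above, you again use the errors to adjust the weights until you get the output you want. The difference is that the errors at the outputs are also passed backward through the network to adjust the weights leading into the hidden layer. That is where the name backpropagation comes from, and the algorithm turned out to be very successful.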
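Here is a minimal sketch of backpropagation for a network with one hidden layer, in plain Python. It assumes sigmoid neurons, a bias weight on every neuron, a squared-error loss and full-batch gradient descent; the class name `TinyMLP`, the layer sizes, the learning rate and the seed are illustrative choices, not the setup from 1987. It trains on XOR, which a single-layer perceptron cannot learn.

```python
import math
import random

XOR = [([0.0, 0.0], [0.0]), ([0.0, 1.0], [1.0]), ([1.0, 0.0], [1.0]), ([1.0, 1.0], [0.0])]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

class TinyMLP:
    """Inputs -> one sigmoid hidden layer -> sigmoid outputs, squared-error loss."""

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = random.Random(seed)
        # w1[j][i]: weight from input i to hidden neuron j (last column is the bias)
        self.w1 = [[rng.uniform(-1, 1) for _ in range(n_in + 1)] for _ in range(n_hidden)]
        # w2[k][j]: weight from hidden neuron j to output k (last column is the bias)
        self.w2 = [[rng.uniform(-1, 1) for _ in range(n_hidden + 1)] for _ in range(n_out)]

    def forward(self, x):
        xb = list(x) + [1.0]  # constant 1 feeds the bias weight
        h = [sigmoid(sum(w * v for w, v in zip(row, xb))) for row in self.w1]
        hb = h + [1.0]
        y = [sigmoid(sum(w * v for w, v in zip(row, hb))) for row in self.w2]
        return xb, hb, y

    def loss(self, data):
        """Total error: half the sum of squared differences over all samples."""
        return sum(0.5 * sum((yk - tk) ** 2 for yk, tk in zip(self.forward(x)[2], t))
                   for x, t in data)

    def gradients(self, data):
        """Backpropagation: push the output errors back through w2 to the hidden layer."""
        g1 = [[0.0] * len(row) for row in self.w1]
        g2 = [[0.0] * len(row) for row in self.w2]
        for x, t in data:
            xb, hb, y = self.forward(x)
            # output error terms: (y - t) * sigmoid'(net), where sigmoid' = y * (1 - y)
            d_out = [(yk - tk) * yk * (1.0 - yk) for yk, tk in zip(y, t)]
            # hidden error terms: output errors weighted by w2, times sigmoid'(net)
            d_hid = [hb[j] * (1.0 - hb[j]) * sum(d_out[k] * self.w2[k][j] for k in range(len(d_out)))
                     for j in range(len(self.w1))]
            for k, dk in enumerate(d_out):
                for j, hj in enumerate(hb):
                    g2[k][j] += dk * hj
            for j, dj in enumerate(d_hid):
                for i, xi in enumerate(xb):
                    g1[j][i] += dj * xi
        return g1, g2

    def train_step(self, data, lr=0.5):
        """One gradient-descent step on the whole training set."""
        g1, g2 = self.gradients(data)
        for weights, grads in ((self.w1, g1), (self.w2, g2)):
            for row, grow in zip(weights, grads):
                for i, g in enumerate(grow):
                    row[i] -= lr * g

net = TinyMLP(2, 4, 1, seed=1)
before = net.loss(XOR)
for _ in range(3000):
    net.train_step(XOR)
after = net.loss(XOR)
print(f"error before: {before:.4f}, after: {after:.4f}")
for x, t in XOR:
    print(x, "->", round(net.forward(x)[2][0], 3), "target", t[0])
```

How close the outputs get to the XOR targets depends on the random starting weights; a network this small can occasionally get stuck, which is the local-minimum caveat of gradient descent.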

Below I am showing two 10 × 10 pixel images containing the letter F. The neural network I created had 100 inputs, one for each pixel, a hidden layer, and one output neuron for each letter I wanted to read. I think I used about 10 or 20 versions of each letter for training, meaning that I ran the algorithm to adjust the weights until the error was almost gone.

When I then gave the network an image of a letter that had not been used in training, it typically identified the letter correctly even though the image was new. Note that my experiment took place in 1987; OCR has come a long way since then.
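The input and output encoding described above can be sketched like this. The bitmap of an F, the three-letter alphabet and the function names are hypothetical; the point is only that each of the 100 pixels becomes one input, each letter gets its own output neuron, and the most active output is taken as the answer.

```python
# Hypothetical encoding for a 10 x 10 letter recognizer: 100 inputs, one output per letter.

LETTERS = ["E", "F", "T"]  # hypothetical set of letters the network should read

F_IMAGE = [  # hypothetical 10 x 10 bitmap of an F ('#' = dark pixel)
    "..........",
    ".#######..",
    ".#........",
    ".#........",
    ".#####....",
    ".#........",
    ".#........",
    ".#........",
    ".#........",
    "..........",
]

def image_to_inputs(rows):
    """Flatten a 10 x 10 bitmap into 100 inputs: 1.0 for a dark pixel, 0.0 otherwise."""
    if len(rows) != 10 or any(len(r) != 10 for r in rows):
        raise ValueError("expected a 10 x 10 image")
    return [1.0 if ch == "#" else 0.0 for row in rows for ch in row]

def target_for(letter):
    """Desired outputs: 1.0 on the neuron for this letter, 0.0 on all the others."""
    return [1.0 if name == letter else 0.0 for name in LETTERS]

def decode(outputs):
    """Read the network's answer as the letter whose output neuron is most active."""
    best = max(range(len(outputs)), key=lambda k: outputs[k])
    return LETTERS[best]

x = image_to_inputs(F_IMAGE)
print(len(x), target_for("F"), decode([0.1, 0.8, 0.3]))
```

In training, each pair `(image_to_inputs(image), target_for(letter))` would be one example for a network with 100 inputs and one output per letter.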

At first, it was believed that adding more than one hidden layer did not add much, partly because networks with many layers were hard to train with backpropagation. That changed in 2006, when Hinton and his colleagues showed that a deep network could be trained well by first training its layers one at a time and then fine-tuning the whole network with backpropagation. That is how, at the beginning of this century, the deep learning neural network (or just deep learning AI) was born. Our Nobel Prize winner Geoffrey E. Hinton was a pioneer in deep learning neural networks.
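Structurally, a deep network is the same idea with several hidden layers stacked in sequence. The sketch below is a forward pass for any number of fully connected sigmoid layers; the layer sizes and seed are hypothetical, and it only shows how a signal flows through the layers, not how such a network is trained.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def init_network(layer_sizes, seed=0):
    """One weight matrix per pair of adjacent layers; each neuron's row ends with a bias."""
    rng = random.Random(seed)
    return [
        [[rng.uniform(-1, 1) for _ in range(n_in + 1)] for _ in range(n_out)]
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    ]

def forward(network, x):
    """Feed the input through every layer in turn; depth is just the length of the list."""
    activation = list(x)
    for layer in network:
        inputs = activation + [1.0]  # constant 1 for the bias weight
        activation = [sigmoid(sum(w * v for w, v in zip(neuron, inputs))) for neuron in layer]
    return activation

# Hypothetical sizes: 4 inputs, three hidden layers (5, 5, 3), 2 outputs.
layer_sizes = [4, 5, 5, 3, 2]
net = init_network(layer_sizes)
print(forward(net, [1.0, 0.0, 0.0, 1.0]))
```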

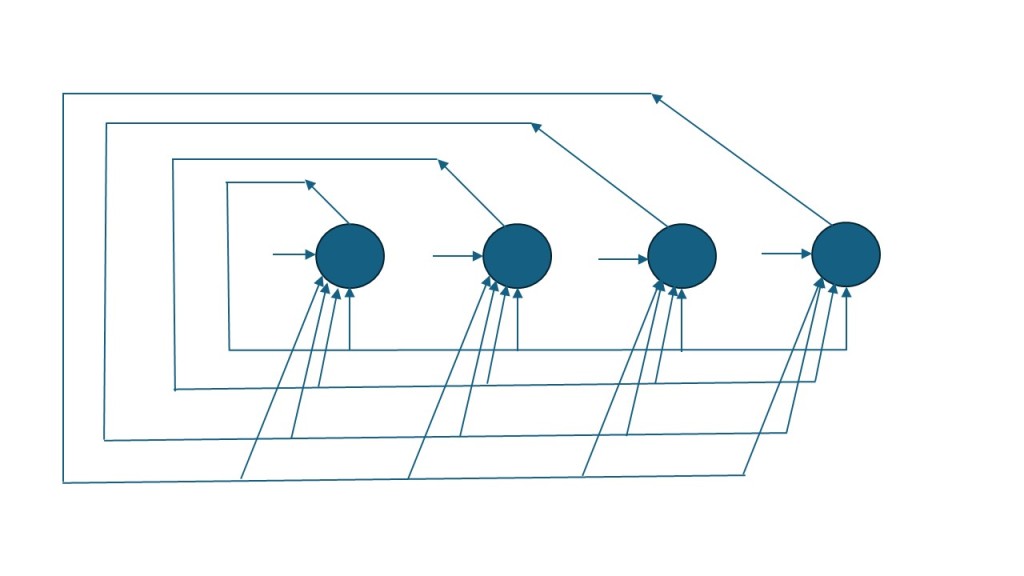

I should mention that there are many styles of neural networks, not just the ones I’ve shown here. Below is a so-called Hopfield network, named after John J. Hopfield (it was certainly not the only thing he discovered).
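A Hopfield network works differently from the layered networks above: every neuron is connected to every other neuron, and the network stores patterns as stable states that it falls back into. This is a minimal sketch under common textbook assumptions (+1/-1 neuron states, Hebbian weights w[i][j] = sum of p[i] × p[j] over the stored patterns, one neuron updated at a time); the two 8-neuron patterns are made-up examples.

```python
# Hopfield network sketch: +1/-1 neurons, symmetric Hebbian weights, asynchronous updates.

def train_hopfield(patterns):
    """Hebbian storage: w[i][j] = sum over patterns of p[i] * p[j], no self-connections."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w

def recall(w, state, max_sweeps=10):
    """Update one neuron at a time until nothing changes (a stored state is reached)."""
    s = list(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(s)):
            field = sum(w[i][j] * s[j] for j in range(len(s)))
            new = 1 if field >= 0 else -1
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:
            break
    return s

p1 = [1, 1, 1, 1, -1, -1, -1, -1]  # made-up pattern
p2 = [1, -1, 1, -1, 1, -1, 1, -1]  # made-up pattern
w = train_hopfield([p1, p2])

noisy = [-1] + p1[1:]  # p1 with its first neuron flipped
print(recall(w, noisy) == p1)
```

The corrupted copy of p1 settles back into p1, which is why Hopfield networks are often described as associative memory.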

For your information, ChatGPT was originally built on GPT-3.5, a refined version of GPT-3. GPT-3 is a deep learning neural network like the one in my colorful picture above, although of a more elaborate type called a transformer. Instead of 3 hidden layers it has 96 layers, and instead of 19 neurons it has about 175 billion parameters (adjustable weights). Congratulations to John J. Hopfield and Geoffrey E. Hinton.
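Size figures quoted for language models such as GPT-3 count parameters (weights and biases), not neurons, and parameters grow much faster than neurons because every connection has its own weight. The sketch below counts both for a plain fully connected network; the layer sizes are hypothetical, chosen only to give 3 hidden layers and 19 neurons, and transformers like GPT-3 are organized differently, so this formula does not apply to them directly.

```python
# Neurons vs. parameters in a plain fully connected network (hypothetical sizes).

def count_neurons(layer_sizes):
    """Every layer, including the input layer, contributes its neurons."""
    return sum(layer_sizes)

def count_parameters(layer_sizes):
    """One weight per connection between adjacent layers, plus one bias per non-input neuron."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

layer_sizes = [4, 5, 5, 3, 2]  # hypothetical: 3 hidden layers, 19 neurons in total
print(count_neurons(layer_sizes), count_parameters(layer_sizes))
```

For these sizes it reports 19 neurons and 81 parameters.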

I can’t say I understand all of this, but it is fascinating. Thanks, Thomas 🙂


Thank you so much Denise. It is a little bit of computer nerd stuff, but a couple of prominent computer scientists got the Nobel Prize.
